Frequently Asked Questions

Most of the questions and answers have been copied from the forum thread. I will, however, update them over the next few days and move everything here, since it's easier to maintain.

Do I need Private Proxies?

You don’t NEED them, but they make things a lot faster and some people want to stay anonymous. Proxies are also highly recommended for everyone who uses a VPS: your account could get banned after your provider has received too many complaints about the IP. Whether you get complaints, however, depends on how you use the tool. It is after all still a tool that can be used for good or bad. We are not responsible for what you do with it, but of course we strongly suggest that you do not spam with it!

What are good proxy providers?

Do I need a VPS?

No! But note that you usually want to run the software 24/7 on a fast internet connection, so you might consider getting one, especially if your own internet connection is slow.

What are good VPS providers?

What about Captchas?

A lot of people use software like Captcha Breaker and an online service like 2captcha.com. If you use Captcha Breaker and don't know which settings to use, please check our forum.

What should I consider when using email accounts?

Make sure the program can access the POP3 server for checking emails. Also check that your email client does not delete emails once it logs into the server. All emails should be in your inbox and none should be saved in “Spam/Junk”. You should check from time to time whether your email has been blacklisted and, if that’s the case, exchange it for a new one.

Why does GSA ignore my PR filters?

Uncheck “Also analyse and post to competitors backlinks” and “Use URLs from global sites lists if enabled”.

Can the software handle input text in languages other than English?

The software can handle all UTF-8 characters (which includes nearly all known characters). Before submission the content is encoded to a format which works for the site. Sometimes you will still see display errors, but that is a problem of the site, which can’t handle the encoding correctly.

How can I post to .gov, .edu, .de, .es etc. only?

Go to “Skip sites where the following words appear in the URL/domain” and add an exclamation mark followed by the domain ending. So for posting only to .edu sites you’d add !.edu. Please enter all “white listed” domains on one line, like “!.edu !.gov !.gsa”, and not one per line.
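As a rough illustration, the one-line entry described above could be assembled like this. The helper name `build_whitelist_line` is hypothetical and not part of the software; it only formats the text you would paste into the filter field.

```python
def build_whitelist_line(endings):
    """Join domain endings into a single '!'-prefixed whitelist line."""
    entries = []
    for ending in endings:
        ending = ending.strip()
        if not ending:
            continue
        # Make sure each ending starts with a dot, e.g. "edu" -> ".edu".
        if not ending.startswith("."):
            ending = "." + ending
        entries.append("!" + ending)
    # All whitelisted endings go on ONE line, separated by spaces.
    return " ".join(entries)


print(build_whitelist_line([".edu", "gov", ".de"]))  # !.edu !.gov !.de
```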

Where can I find a decent badwords list?

You can put a badwords list together yourself using sites like Swearword Dictionary, or use lists posted by users, e.g. s4nt0s.

What are the different colors for?

- Green = submitting

- Blue = verification

- Orange = searching for new targets

- Red = something is wrong (not used for now)

How can I display the diagram?

Right-click on a project, select Show URLs → Submitted or Verified → Stats → Diagram.

Alternatively: Right-click on a project → Show Diagram/Chart.

How can I turn off the popups?

There are two options that cause popups. Change both of them:

Project Options → If a form field can't be filled (like category) → Choose Random

Options → Captcha → Uncheck “Finally ask user if everything else fails (else the submission is skipped)”

I don’t want GSA to place my link at the end of the articles. How can I change that?

Insert <a href="%url%">%anchor_text%</a> at the place where you want your URL + anchor text to appear, or disable the option to place the link at the bottom (below the article input field).

How to disable German and Polish engines?

Leave the respective fields blank and check “Uncheck Engines with empty fields”, or right-click on the field and choose “Disable Engines that use this”.

Is there a way to retrieve user account login details, e.g. account name + password of Web 2.0s?

Edit/Double Click Project → Tools → Export → Account Data

What is the difference between "Delete URL Cache" and "Delete History Cache"?

Delete Cache - Click this only if you have e.g. imported a wrong target URL list or think you want to clear the URLs that a previous search task found.

Delete History - Click this if you:

- a) want to submit to sites that you previously skipped (e.g. you didn't want to enter captchas back then but want to do so now)

- b) think it found sites in the past that it skipped but that are now supported, e.g. by newly added engines

- c) think it found sites in the past but your internet connection somehow prevented the submission (e.g. a proxy issue)

Can I use GSA for building links to my moneysite?

There are a lot of different opinions on that topic. If you set up the program correctly with strict filters, good content (ideally manually spun) and only certain types of platforms, there shouldn’t be problems. Keep in mind that it always depends on the person who uses a tool as powerful as GSA.

Can I set the software to build backlinks in drip feed mode?

Project Options → Pause the project after XX submissions or verifications for XX minutes/days.

So you could set it up to post 10 links a day with:

Pause project after 10 verifications for 1440 minutes/1 day.
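The pause length for other drip-feed rates follows from simple arithmetic. Here is a minimal sketch, assuming the project pauses once per batch and the time needed to build the batch itself is negligible. `pause_minutes` is a hypothetical helper, not a setting of the program.

```python
MINUTES_PER_DAY = 24 * 60  # 1440


def pause_minutes(links_per_day, links_per_batch):
    """Minutes to pause after each batch so that roughly
    `links_per_day` links are built per day."""
    if links_per_day <= 0 or links_per_batch <= 0:
        raise ValueError("both values must be positive")
    batches_per_day = links_per_day / links_per_batch
    return round(MINUTES_PER_DAY / batches_per_day)


print(pause_minutes(10, 10))  # 1440, the example from the text
print(pause_minutes(20, 5))   # 360
```

The first call reproduces the setting above: pause after 10 verifications for 1440 minutes.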

How long does it take to verify my submissions?

It can take up to 5 days until a submission is checked. Be patient.

Why are there more verified than submitted links?

If a submitted URL timed out (too many unsuccessful checks), it is removed, so the number of submitted links can decrease and is sometimes lower than the number of verified links.

The number of submitted URLs in a project increases when:

- an account is created and awaiting verification (e.g. by email)

- a link is submitted and awaiting verification

The number of submitted URLs decreases when:

- a link is verified and actually live/visible for all → the number of verified URLs then increases

- an account is verified and moved to the target URL list

The number of verified URLs increases when:

- a submitted URL is verified

The number of verified URLs decreases when:

- automatic re-verification runs and the link is no longer present

- automatic re-verification runs on a tier project and the main project no longer has the backlink present

- automatic re-verification runs on a main project and the linked URL is no longer among the project's URLs

GSA suddenly finds a lot less new links to post to than the days before. Why is that?

Wait a few days to see if this is just a temporary problem. If it persists, the project has most likely run out of keywords. Try adding more keywords and choose some new search engines to scrape from.

Today I got XY submitted and YY verified... is this normal?

Ozz:

How should we know? If you say that you are going 10 mph and ask whether that's normal, how should anyone know whether you are driving a car, riding a bicycle or riding a skateboard?

There are so many factors that play a role. It’s impossible to answer that question with so little information. We need to know the number of

- Projects

- URLs you are processing

- Search engines you selected

- Keywords you entered

Also, what

- types of sites you selected

- kind of filters you use (OBL, PR,…)

- processor power the PC you are running on has

- amount of RAM the PC you are running on has

- speed your internet connection has

And how long you have already been running the project.

I’m getting a lot of download failed errors. How can I fix it?

Check if GSA is blocked by a firewall or antivirus software. Another reason can be bad proxies, often public ones. Try running without proxies or with other/private proxies to check whether it works better.

What placeholders are available for the fields?

- %NAME% - will be replaced with a random user's name

- %EMAIL% - will be replaced with a random email (auto generated)

- %WEBSITE% - will be replaced with the user's website URL

- %BLOGURL% - will be replaced with the target url you are about to submit to

- %BLOGTITLE% - will be replaced with the page title of the target site

- %keyword% - uses random keyword from project settings

- %spinfile-<file>% - takes a random line in that file

- %source_url% - referrer page

- %targeturl% - same as %blogurl%

- %targethost% - just the http://www.domain.com without a path

- %random_email% - same as %email%

- %random-[min]-[max]% - a random number between min and max

- %spinfolder-<folder>% - takes content of a random file in that folder

- %file-<file>% - takes content of that file

- %url_domain% - just the domain name of your url

- %url% - your url

- %?????% - anything from the project input boxes, e.g. %anchor_text% or %website_title% or %description_250% …

- %random_url% - inserts another URL from your project

- %random_anchor_text% - inserts another random anchor text.

- %random_sanchor_text% - inserts another random secondary anchor text

- %url1%, %url2% … - inserts the exact URL from your project.

special variables for blogs:

- %meta_keyword% - takes a keyword from target meta tags

- %blog_title% - same as %blogtitle%

special variables for image commenting:

- %image_title% - the name of the image you comment on (sometimes it is sadly the filename only as some webmasters don't set a title)

A more complete list can be found in the macro guide.
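To make the idea of placeholders concrete, here is a small sketch of how such a substitution could work in principle. It is not the program's implementation. The `expand` function, the `values` dictionary and the case-insensitive lookup are all assumptions made for illustration, and only plain values plus the %random-[min]-[max]% form are handled.

```python
import random
import re


def expand(template, values, rng=random):
    """Replace %placeholder% tokens in `template`.

    `values` maps lower-case placeholder names (without the % signs)
    to text. Unknown placeholders are left untouched.
    """
    def substitute(match):
        token = match.group(1)
        # %random-[min]-[max]% -> a random number between min and max
        number = re.fullmatch(r"random-(\d+)-(\d+)", token)
        if number:
            low, high = int(number.group(1)), int(number.group(2))
            return str(rng.randint(low, high))
        # Assumption of this sketch: names are looked up case-insensitively.
        return values.get(token.lower(), match.group(0))

    return re.sub(r"%([^%\s]+)%", substitute, template)


values = {"url": "http://www.example.com/", "anchor_text": "example site"}
print(expand('<a href="%url%">%anchor_text%</a>', values))
# <a href="http://www.example.com/">example site</a>
```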

How can I add new engines without updating the whole software?

Right-click on a running Project → Modify Project → Use New Engines

How can I backup my projects?

Select the Projects you want to backup → Right-click → Modify → Backup

Can I run two instances of my copy at the same time?

No. Please support the developers and buy another copy. It is already very cheap, some would say too cheap, so don’t try any shady tricks.

Is GSA better than Scrapebox?

This question can’t be answered, because the two tools differ in most respects. Scrapebox is basically a Link Harvester + Blog Commenter, and GSA Search Engine Ranker is an automatic backlinking tool with lots of different backlink sources. A good idea is to combine the strengths of both tools.

How to combine Scrapebox and GSA?

You can use Scrapebox for harvesting URLs with GSA footprints. I extracted the footprints with the tool posted by s4nt0s. Put your keyword list into Scrapebox → click on the “M” → load the list with GSA footprints. Now you can harvest the URLs with Scrapebox. After that you can use the Scrapebox filter functions, like “Remove duplicate URLs”, and filter for PR. Now you have your own list that you can use with GSA. See next Q&A.

Addition: You can scrape for a specific platform by:

- Using the platform footprint in GSA SER:

- Options > Advanced > Tools > Search Online for URLs > [Select Platform]

- Add the footprints into Scrapebox, and scrape. Then just import those target URLs into GSA SER, and it will use these to post to.

How can I use GSA as posting tool for my own list?

Uncheck all search engines, uncheck “Also analyse and post to competitors backlinks”, uncheck “Use URLs from global sites lists if enabled”. Right-click on the project and click “Import Target URLs”.

I'm not getting the expected results. Can someone help me?

“If you want to get some good feedback and assistance in this forum you need to have a detailed post with your issues. First you need to search this forum and ensure your question is not answered. Go to Google and type in: site:forum.gsa-online.de keyword. If that doesn't help: Take screenshots, list all your settings. Experienced users will take a quick look at your screenshots and give you some basic ideas where you're going wrong.” Copy this sample post: https://forum.gsa-online.de/discussion/1440/identify-low-performance-factor#Item_5

How to post Full Article on WIKI?

Edit the project → click Options → go to Filter URLs → Type of Backlinks to create → enable Article-Wiki. However, this is not recommended and is considered spam. Don't use it unless you know what you are doing.

Why do I see so many unrelated anchor texts that I did not define/do not want?

- a) You use generic anchors in project data → uncheck it

- b) You use domain anchors in project data → uncheck it

- c) Some engines simply use their own anchors like “Homepage of Author” → right click on engines → uncheck engines not using your anchor texts

- d) Some engines use Names instead of Anchor Texts or Generic Anchors like Blog Comments (see a or disable Blog Comments/Image Comments)

Why do my top anchor texts come up with the URL in front?

The Trackback Format2 engine is producing an anchor text like ”Anchor Text - www.yoursite.com“. Just disable this one if you do not want it.

How to export backlinks for today only?

- Right click on your project → Show URLs → Verified URLs

- Right click on the list → Select → By Date

- Click on the EXPORT button or simply right click again and choose Copy URL

What do all the different colors mean in the Show Verified URLs dialog?

This dialog is reachable via right click on a project → Show URLs → Verified URLs. Here you can reverify links or check the index status, which then results in different colors.

- green background → successfully verified

- red background → verification failed

- yellow background → verification failed but with a timeout, which means the server might be down temporarily

- green text color → index check successfully finished and URL is indexed

- red text color → index check successfully finished and URL is NOT indexed

- yellow text color → index check failed due to a possible ban on the search engine with that IP/Proxy

When asked to enter a mask, what syntax is accepted?

- A simple word such as string: the selection must contain this word, but it will not match e.g. stringlist

- If you also want to match parts of words, use *string*. The sign “*” stands for any number of characters, including none.

- If you want to match against multiple masks use “|” as a separator such as string1|string2|…. The “|” sign works as an “OR” operator.
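The three rules above can be sketched as a translation into a regular expression. This is only an illustration of the described behavior, not the program's own matcher. The function names are hypothetical, and because the text does not say how upper and lower case are treated, this sketch compares case-sensitively.

```python
import re


def mask_to_regex(mask):
    """Translate a mask such as 'string1|*string2*' into a compiled regex."""
    alternatives = []
    for part in mask.split("|"):          # "|" works as an OR operator
        part = part.strip()
        if not part:
            continue
        # Escape everything, then turn the escaped "*" into "any or no chars".
        body = re.escape(part).replace(r"\*", ".*")
        # Without wildcards the word must stand alone, so "string"
        # does not match inside "stringlist".
        alternatives.append(r"(?<!\w)" + body + r"(?!\w)")
    if not alternatives:
        raise ValueError("mask is empty")
    return re.compile("|".join(alternatives))


def matches(mask, text):
    """True if `text` is selected by `mask`."""
    return mask_to_regex(mask).search(text) is not None


print(matches("string", "a string here"))        # True
print(matches("string", "a stringlist here"))    # False
print(matches("*string*", "a stringlist here"))  # True
```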

When viewing the submitted URLs from a Project, I see many with "awaiting account verification". What does that mean?

It means that an account was created on that site with an email address. That account, however, needs to be verified through a link that was sent to you via email. The project now checks your email at intervals and waits for that message so it can continue with the verification, followed by login and link placement.

If the entry stays in this state, one of the following might have happened:

- The website is simply no longer working and the email was not sent

- Your email account no longer works (e.g. you need to manually verify your email account → check the accounts in project options)

- The message sent was tagged as spam and didn't reach your inbox (log in to that email account manually via the web and check)

- The message is simply delayed and arrives a lot later (can happen with manual verification by site admins but is not very common)

- The previous registration attempt on that site actually failed but the program was unable to see that error and thought it was successful

If there have been a lot of attempts to verify that account, the program will simply ignore that site and remove it from the submitted URLs list. The submitted count then decreases without further attempts to submit.

What does a re-verification in project options do?

All verified links of a project get checked for:

- the webpage is still valid (HTTP 200 as reply code)

- the placed backlink to the URL of that project is still valid and present

- for a tier project it is also checking if the placed backlink URL is actually still part of the main project's verified URLs
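The three checks can be sketched as follows. This is a simplified illustration under stated assumptions, not the program's code: `still_verified` and `LinkCollector` are hypothetical names, the page is passed in as already downloaded HTML, and URLs are compared as exact strings (a real check would probably normalize them first).

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value.strip())


def still_verified(status_code, page_html, linked_url, main_verified_urls=None):
    # 1) the page must still answer with HTTP 200
    if status_code != 200:
        return False
    # 2) the backlink to the project's URL must still be on the page
    collector = LinkCollector()
    collector.feed(page_html)
    if linked_url not in collector.hrefs:
        return False
    # 3) tier projects only: the linked URL must still be one of the
    #    main project's verified URLs
    if main_verified_urls is not None and linked_url not in main_verified_urls:
        return False
    return True


page = '<p><a href="http://www.example.com/">example</a></p>'
print(still_verified(200, page, "http://www.example.com/"))  # True
print(still_verified(404, page, "http://www.example.com/"))  # False
```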

What should I do on a message such as "No targets to post to (...)"?

First, this is not an error, just a warning that in its current state the project is not getting enough URLs to place links on (target URLs). There can be many reasons for this; the hints in brackets in that message might help you find the issue. Most likely proxies are not configured correctly, email accounts are not working, or the filters are too strict.

If you still can't figure it out, please use pastebin.com to upload some log lines from that project and discuss this on our forum.

I got a lot of submitted URLs but very little verified. Is this normal?

That depends on your setup. If you are using the “Wordpress” and “Drupal” engines, then it is indeed fairly normal. There are a lot of sites based on these two engines, and registration is usually easy and allowed. So each registration results in one submitted entry in your project. Later, if the program is able to verify the account, it tries to log in, and even that usually works very well. But the submission of articles is not something that many sites allow. In the end just a few verified URLs get built from all the submitted ones. This cannot easily be detected at the point where a site is identified and the account registration starts. So either you get a list of sites from somewhere else where this is pre-checked, or you have to live with this ratio of submitted to verified sites.

What does "no engine matches" mean?

It's often seen in the log window when the program tries to submit to certain sites. In order to submit to a site, the program must identify the system or engine the site was built on. Sometimes this cannot be determined, and the submission is aborted soon after with an error message such as:

[xx:xx] no engine matches - <url> [..]

This can even happen if the program found sites by internal footprints in search engines or when using site lists. A site can change, show error messages or simply have changed its structure. In such cases the program doesn't know how to continue or what script to use to submit to that site.

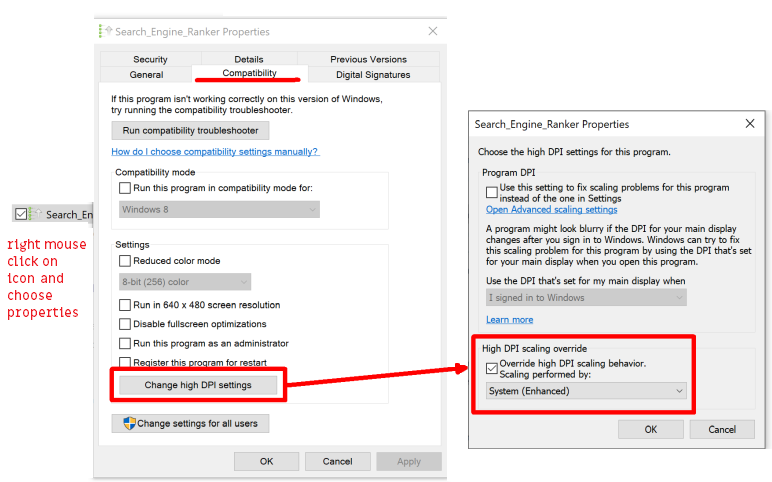

The GUI is so tiny that I can hardly read anything. How to fix this?

This is unfortunately a problem with very high dpi or font settings. In most cases it would be best to just change back to 96 dpi settings. This is however not a good idea when running on a very high resolution (4k or higher).

If you are running Windows 10, try the following:

Right click on the program's icon and choose “Properties” (usually the last entry on the popup menu). Then click on the “Compatibility” tab followed by clicking on “Change high DPI settings”. On that new form enable “High DPI scaling override” with “System Enhanced”.

Just my thoughts on this: I really see no point in buying a 4k monitor, using a very high resolution and then telling Windows to scale everything up again. You could just as well use a lower resolution and save a lot of resources (CPU/GPU cycles, processing power,…). Just think about it.

How can I skip NoFollow/DoFollow links?

You can of course skip that in two different ways when editing the project:

- right click on the very left box with the engine/platform selection and choose “Uncheck Engines with No/Do-Follow Links”

- for engines that build both types, try also the project option called “Try to skip creating Nofollow links” (can be changed to Try to skip creating Dofollow links).

However, keep in mind that nofollow links are also important. It looks very suspicious to just have dofollow links. More about it on our forum.

How to submit content to my own sites?

Of course you can submit to sites where you have an account already (login and/or email, password). Just follow these little steps:

- Create a file with the content such as:

URL:login:password

URL2:login:password

URL3:login:password

…

- Right-click on the project and choose Modify Project → Import → Account Data → …

- When asked to import them as Target URLs, answer YES.

Once you start this project, it will try to log in and post the content already defined in the project. Please make sure that the engines/platforms the imported accounts are based on are enabled in the project settings.
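If you keep your accounts somewhere else, the URL:login:password file can be generated with a few lines of script. The following is only a sketch: the function names are hypothetical, UTF-8 is an assumed file encoding, and rejecting colons in the login or password is a choice of this sketch (the URL itself already contains colons, so further ones would make a line ambiguous).

```python
def account_lines(accounts):
    """Turn (url, login, password) tuples into URL:login:password lines."""
    lines = []
    for url, login, password in accounts:
        # Assumption of this sketch: a ":" in login or password would make
        # the line ambiguous, so such entries are rejected.
        if ":" in login or ":" in password:
            raise ValueError(f"colon in login or password for {url}")
        lines.append(f"{url}:{login}:{password}")
    return lines


def write_account_file(path, accounts):
    """Write one account per line to `path` (UTF-8 is an assumption)."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(account_lines(accounts)) + "\n")


accounts = [
    ("http://blog.example.com/", "alice", "s3cret"),
    ("http://wiki.example.org/", "bob", "pa55word"),
]
print("\n".join(account_lines(accounts)))
```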

Why do I get no or very few PingBack backlinks?

This method rarely works, because you must have placed a link to the target site on your own page. There are ways to do this by submitting a special URL with a parameter, but I'm not going into details about it. Simply uncheck this engine if you are not doing such tricky things.

I have backlinks created with another product. Can I use them and build links to them?

Yes, of course. If the backlinks are pointing to a URL present in one of your projects' data, then right click on that project and choose Show URLs → Verified URLs.

In that dialog you can again right click in the box and import your URLs as verified. You can also resolve anchor texts and detect the used platform afterwards.

How many search queries are performed before rotating the proxy?

It actually works differently. The program chooses one proxy for a given search query and runs the search with this proxy only, until all result pages are parsed. This is less suspicious to the search engine, as the same IP is seen for all the different pages. The same cookies and user agent are used as well. It waits a set time between searches so that this IP/proxy does not get banned. This also means that the more proxies you have, the more searches can be performed simultaneously (limited only by the number of free thread slots).
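The "one proxy per query" behavior can be pictured with a short sketch. It only builds a plan of which proxy would serve which result page and performs no network access. `plan_searches` is a hypothetical name, and picking proxies in round-robin order is an assumption, since the text does not say how the proxy is chosen.

```python
from itertools import cycle


def plan_searches(queries, proxies, pages_per_query):
    """Return (query, page, proxy) tuples: one proxy per query,
    reused for every result page of that query."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    proxy_pool = cycle(proxies)  # round-robin choice is an assumption
    plan = []
    for query in queries:
        proxy = next(proxy_pool)  # chosen once per query, not per page
        for page in range(1, pages_per_query + 1):
            plan.append((query, page, proxy))
    return plan


for step in plan_searches(["keyword one", "keyword two"], ["proxy-a", "proxy-b"], 2):
    print(step)
```

Each query keeps its proxy across all of its pages, which is the point made above: the search engine sees one consistent IP per query.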