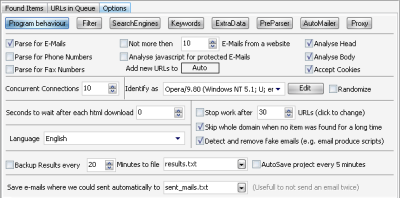

Options - Program behavior

Not more than XX E-Mails from same host

This limits how many e-mails are extracted from a single website, so that you do not collect a large number of addresses that have nothing to do with your search keyword but happen to be listed there as well. Please note that this counter is reset for each new URL from that host.
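
The limit can be pictured with a short Python sketch. It is only an illustration of the idea, not the program's actual code: the function name `extract_capped`, the parameter `max_per_url`, and the simplified address pattern are all assumptions made for this example. Because the counter is reset for each new URL, the cap is applied per page.

```python
import re

# Simplified address pattern, good enough for an illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_capped(page_text, max_per_url):
    """Return at most max_per_url distinct addresses, in order of appearance."""
    found = []
    for match in EMAIL_RE.finditer(page_text):
        if len(found) >= max_per_url:
            break  # cap reached for this URL; the next URL starts from zero
        address = match.group(0).lower()
        if address not in found:
            found.append(address)
    return found
```

Calling `extract_capped(text, 2)` on a page that lists ten addresses would return only the first two distinct ones.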

Analyze javascript for protected E-Mails

Some sites use JavaScript to hide their data from parsing programs. If you enable this option, the program will try to analyze the JavaScript and extract the data anyway. This does not always work, but the option can safely be left enabled.
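
One common way of hiding an address is to write it as a list of character codes passed to JavaScript's `String.fromCharCode`. The Python sketch below decodes only that single pattern. Which techniques the program itself recognizes is not documented here, so treat the function name `decode_from_char_code` and the approach as assumptions.

```python
import re

# Matches calls such as String.fromCharCode(97, 64, 98) with numeric arguments.
CHAR_CODE_RE = re.compile(r"String\.fromCharCode\(([\d\s,]+)\)")


def decode_from_char_code(script):
    """Return the decoded string of every String.fromCharCode(...) call found."""
    decoded = []
    for match in CHAR_CODE_RE.finditer(script):
        codes = [int(part) for part in match.group(1).split(",") if part.strip()]
        decoded.append("".join(chr(code) for code in codes))
    return decoded
```

The decoded strings can then be searched for addresses in the same way as ordinary page text.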

Analyze Head/Body

Only the chosen parts of the downloaded HTML page will be analyzed. A web page consists mainly of two parts: a head, where the web page is described, and the actual content (body). In most cases you want to analyze both.
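
As a rough illustration of restricting the analysis to one part of a page, the following Python sketch collects only the text found inside the chosen sections. The class `SectionText` and the helper `section_text` are hypothetical names for this example. The sketch looks at text nodes only and ignores attribute values such as `meta` content.

```python
from html.parser import HTMLParser


class SectionText(HTMLParser):
    """Collect text that appears inside the wanted sections ("head", "body")."""

    def __init__(self, sections):
        super().__init__()
        self.wanted = set(sections)
        self.current = None  # section the parser is currently inside
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("head", "body"):
            self.current = tag

    def handle_endtag(self, tag):
        if tag == self.current:
            self.current = None

    def handle_data(self, data):
        if self.current in self.wanted:
            self.chunks.append(data)


def section_text(html, sections):
    """Return the text of the chosen sections, joined by single spaces."""
    parser = SectionText(sections)
    parser.feed(html)
    parser.close()
    return " ".join(chunk.strip() for chunk in parser.chunks if chunk.strip())
```

With `sections={"head", "body"}` the whole page is covered, which corresponds to enabling both options.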

Accept Cookies

When parsing websites, some servers store certain settings on the client's machine. These can be various things, such as the search keyword or a page number. It is a good idea to leave this enabled. You only have to disable it in special cases, e.g. when a server identifies you by a cookie it has set and then does not allow more than XX downloads per hour.

Add URLs to

When a new URL is found that should be parsed, it is added to the “URLs in Queue” box. You can define where the program should put it.

Concurrent Connections

This defines how many pages are analyzed simultaneously. Please set this option with care. We had good results with a value of five. Values that are too high will result in too much memory usage and an unstable system.
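
The effect of this setting can be sketched in Python with a fixed-size worker pool. This is a minimal illustration under the assumption that each page is handled by one worker. The names `analyze_all`, `analyze`, and `max_connections` are made up for the example and do not come from the program.

```python
from concurrent.futures import ThreadPoolExecutor


def analyze_all(urls, analyze, max_connections=5):
    """Run analyze(url) for every URL, with at most max_connections at a time."""
    with ThreadPoolExecutor(max_workers=max_connections) as pool:
        # pool.map returns the results in the same order as the input URLs.
        return list(pool.map(analyze, urls))
```

Every worker holds its page in memory while it runs, which is why a large value increases memory usage.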

Identify as

Each browser identifies itself to a web server with a special string when it downloads a website. If you use e.g. Internet Explorer, the web server recognizes it and in some cases shows you a different site than when you use the Opera browser or Firefox. You can also add new browser identifications here by pressing “Edit”, and let the program choose a random one by checking the “Random” box. However, the default setting should be fine in most cases.
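
The identification string is sent in the HTTP `User-Agent` header. The Python sketch below shows how a fixed or random identification could be attached to a request. The strings in `USER_AGENTS` are placeholders, not real browser identifications, and `build_request` is a hypothetical helper.

```python
import random
import urllib.request

# Placeholder identifications; a real list would hold actual browser strings.
USER_AGENTS = [
    "ExampleBrowser/1.0 (placeholder)",
    "OtherBrowser/2.0 (placeholder)",
]


def build_request(url, identify_as=None, pick_random=False):
    """Create a request that carries the chosen identification string."""
    if pick_random:
        agent = random.choice(USER_AGENTS)
    else:
        agent = identify_as or USER_AGENTS[0]  # fall back to the default entry
    return urllib.request.Request(url, headers={"User-Agent": agent})
```

Passing `pick_random=True` corresponds to checking the “Random” box.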

Stop work after XX minutes/URL/Items

Normally the program will run until there is nothing left in the “URLs in Queue” box. In some cases it is enough if you have e.g. found one e-mail from a URL, parsed 100 URLs, or been parsing for 60 minutes.
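
The three limits can be combined as in the following Python sketch, where the run stops as soon as any one of them is reached. The class `StopConditions` and its parameters are assumptions for illustration. In particular, the sketch counts items over the whole run, which may differ from how the program counts them.

```python
import time


class StopConditions:
    """Stop when any configured limit (minutes, URLs, items) is reached."""

    def __init__(self, max_minutes=None, max_urls=None, max_items=None,
                 clock=time.monotonic):
        self.max_minutes = max_minutes
        self.max_urls = max_urls
        self.max_items = max_items
        self._clock = clock          # injectable so the timer can be tested
        self._started = clock()

    def should_stop(self, urls_parsed, items_found):
        elapsed = self._clock() - self._started
        if self.max_minutes is not None and elapsed >= self.max_minutes * 60:
            return True
        if self.max_urls is not None and urls_parsed >= self.max_urls:
            return True
        if self.max_items is not None and items_found >= self.max_items:
            return True
        return False
```

A limit left at `None` is ignored, so the run then continues until the queue is empty.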

Seconds to wait after each Download

Set this to e.g. 1 to reduce CPU usage if you have problems with it.

Backup Results every XX Minutes to file YY

If your system is somewhat unstable, it is a good idea to save the results of the project to a file automatically so that you do not have to start the whole process again.

Save e-mails where we could sent automatically to.

If you use the Automailer, you probably don't want to send e-mails to the same address twice. This option collects all addresses to which e-mails were sent successfully in one file. The program checks new e-mails against this list and skips sending to those that are in it. If you plan to send e-mails to the same people again, you have to either delete them from this list or change the name of the file. A restart of the program might be required.
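
The check against the list can be sketched in Python as follows. The file format (one address per line), the helper names `load_sent` and `send_once`, and the `send` callback are assumptions for this example. The program's real file format may differ.

```python
from pathlib import Path


def load_sent(path):
    """Read the list of already mailed addresses (one per line)."""
    file = Path(path)
    if not file.exists():
        return set()
    lines = file.read_text(encoding="utf-8").splitlines()
    return {line.strip().lower() for line in lines if line.strip()}


def send_once(addresses, path, send):
    """Call send(address) only for addresses that are not yet in the list."""
    sent = load_sent(path)
    delivered = []
    with open(path, "a", encoding="utf-8") as log:
        for address in addresses:
            key = address.strip().lower()
            if key in sent:
                continue  # already mailed earlier, skip it
            if send(address):  # record the address only on success
                log.write(key + "\n")
                sent.add(key)
                delivered.append(address)
    return delivered
```

Deleting a line from the file, or pointing `path` at a different file, makes the address eligible again.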

Skip whole domain when no item was found for a long time

When you are parsing a URL with a lot of sublinks and no item was found for e.g. 100 links, all URLs with the same domain are removed from the queue.
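
A minimal Python sketch of this behavior is shown below. It assumes that misses are counted per host and that the count restarts whenever an item is found. The function `crawl_with_domain_skip`, the `has_item` callback, and `max_misses` are hypothetical names.

```python
from collections import deque
from urllib.parse import urlparse


def crawl_with_domain_skip(queue, has_item, max_misses=100):
    """Visit URLs in order; drop a host's remaining URLs after too many misses."""
    pending = deque(queue)
    misses = {}   # host -> consecutive URLs without an item
    visited = []
    while pending:
        url = pending.popleft()
        host = urlparse(url).netloc
        visited.append(url)
        if has_item(url):
            misses[host] = 0
            continue
        misses[host] = misses.get(host, 0) + 1
        if misses[host] >= max_misses:
            # Remove every queued URL that belongs to the same host.
            pending = deque(u for u in pending if urlparse(u).netloc != host)
            misses[host] = 0
    return visited
```

URLs from other hosts stay in the queue and are processed as usual.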

Detect and remove fake emails (e.g. email produce scripts)

A quick test checks whether the e-mail domain really exists, to rule out fake e-mails generated by a script.
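
How the program performs this test is not described here. One simple possibility, sketched in Python below, is to check whether the domain part of the address resolves in DNS. A stricter check would look for mail (MX) records, which the Python standard library cannot query on its own. The names `domain_resolves` and `drop_fake` are assumptions for this example.

```python
import socket


def domain_resolves(domain):
    """Return True if the domain name resolves to at least one address."""
    try:
        socket.getaddrinfo(domain, None)
        return True
    except (socket.gaierror, UnicodeError):
        return False


def drop_fake(addresses, resolves=domain_resolves):
    """Keep only addresses whose domain passes the resolves() check."""
    kept = []
    cache = {}  # domain -> result, so each domain is looked up only once
    for address in addresses:
        local, at_sign, domain = address.rpartition("@")
        if not at_sign or not local or not domain:
            continue  # not a usable address at all
        domain = domain.lower()
        if domain not in cache:
            cache[domain] = resolves(domain)
        if cache[domain]:
            kept.append(address)
    return kept
```

The `resolves` parameter can be replaced by any other check, which also makes the function testable without network access.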