Options

The software comes with a lot of options that should be suitable for all tasks.

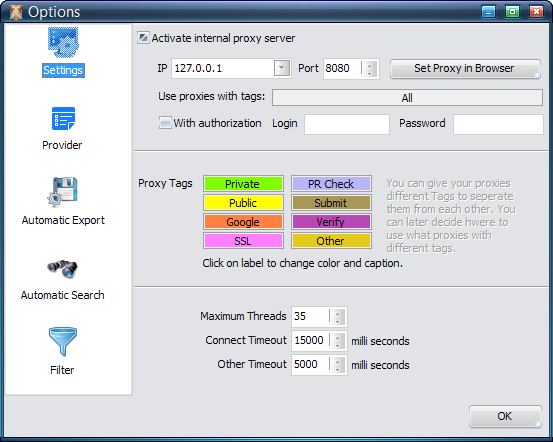

Settings

Internal Proxy Server

On this screen you can set up your own internal proxy server. This allows you to use the software with any other program that lets you enter a proxy. By default the proxy runs on localhost (127.0.0.1), port 8080. You can change this to suit your needs and also add an authorization (login/password).

Once an application (e.g. your browser) uses this proxy server, GSA Proxy Scraper will pick from its proxies in random order, so each new request to the internal proxy is sent through a newly chosen proxy. If you do not want every proxy to be used, you can restrict the selection here by specifying the tags a proxy must have.
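As an illustration of how another program could be pointed at the internal proxy, here is a minimal Python sketch. It assumes the default address 127.0.0.1:8080 mentioned above; the function names are made up for this example, and `http://example.com/` is only a placeholder target.

```python
import urllib.request
from urllib.parse import quote


def build_proxy_url(host="127.0.0.1", port=8080, user=None, password=None):
    """Return an http:// proxy URL, with credentials if both are given."""
    if user is not None and password is not None:
        # Percent-encode credentials so characters like '@' or ':' stay valid.
        return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"
    return f"http://{host}:{port}"


def make_opener(proxy_url):
    """Build an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_status(url, proxy_url, timeout=30):
    """Fetch url through the proxy and return the HTTP status code."""
    with make_opener(proxy_url).open(url, timeout=timeout) as response:
        return response.status
```

Calling `fetch_status("http://example.com/", build_proxy_url())` several times in a row should then leave through different proxies, since the internal proxy chooses a new one per request.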

The option Add a Fake IP for Transparent Proxies in Headers adds an extra, fake IP to the HTTP headers sent through transparent proxies in order to confuse the remote web server about what your real IP is. Scripts usually take only the first IP submitted in the HTTP headers, so there is a good chance that your transparent proxies behave like anonymous ones. However, your real IP is still submitted with every request; it is only likely that the fake IP gets used to identify you instead.
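The effect can be sketched in a few lines of Python. This is only an illustration: it assumes the IPs travel in an `X-Forwarded-For`-style, comma-separated header (the page does not say which headers the software modifies), and both functions are hypothetical. The addresses come from the reserved documentation ranges.

```python
def with_fake_ip(forwarded_for, fake_ip):
    """Prepend a fake IP to an existing comma-separated header value."""
    return f"{fake_ip}, {forwarded_for}" if forwarded_for else fake_ip


def naive_client_ip(forwarded_for, remote_addr):
    """Mimic a simple server script: trust the first IP in the header,
    and fall back to the connecting address if the header is empty."""
    if forwarded_for:
        first = forwarded_for.split(",")[0].strip()
        if first:
            return first
    return remote_addr


# A transparent proxy at 192.0.2.10 would normally reveal 203.0.113.7.
header = with_fake_ip("203.0.113.7", "198.51.100.23")
print(header)                                  # 198.51.100.23, 203.0.113.7
print(naive_client_ip(header, "192.0.2.10"))   # 198.51.100.23
```

Note that the real IP is still present in the header, so a script that inspects every entry can still see it.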

Proxy Tags

A proxy tag is something like a property you give a proxy. This makes it easy for you to group proxies into a maximum of 8 categories. Some of the tags are also set automatically by the proxy testing (Bing, Google, SSL…).

You can change a tag's name and colour by clicking on it.

Proxy Timeouts / Threads

At the bottom you can define how the proxies are tested and how fast. There are two types of timeout: the connect timeout, and the timeout that applies once connected while receiving data from the proxy. If you choose a low timeout, the slower proxies will not pass, which leaves you with only the faster ones.

The number of threads defines how many parallel searches/tests the program will perform.
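The difference between the two timeouts, and the role of the thread count, can be sketched as follows. This is not how GSA Proxy Scraper tests proxies internally; it is a simplified Python sketch in which `proxy_responds` and `check_proxies` are hypothetical helpers and `example.com` is a placeholder target.

```python
import socket
from concurrent.futures import ThreadPoolExecutor


def proxy_responds(host, port, connect_timeout=5.0, read_timeout=10.0):
    """Return True if the proxy accepts a connection and sends any data in time."""
    try:
        # Connect timeout: how long to wait for the TCP connection itself.
        with socket.create_connection((host, port), timeout=connect_timeout) as sock:
            # Read timeout: how long to wait for data once connected.
            sock.settimeout(read_timeout)
            sock.sendall(b"GET http://example.com/ HTTP/1.0\r\nHost: example.com\r\n\r\n")
            return len(sock.recv(1024)) > 0
    except OSError:
        # Covers refused connections and both kinds of timeout.
        return False


def check_proxies(proxies, threads=4, **timeouts):
    """Test (host, port) pairs in parallel; threads limits concurrent tests."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        results = pool.map(lambda p: proxy_responds(p[0], p[1], **timeouts), proxies)
        return dict(zip(proxies, results))
```

Lowering `connect_timeout` or `read_timeout` makes slow proxies fail the check, while raising `threads` shortens the total run at the cost of more simultaneous connections.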

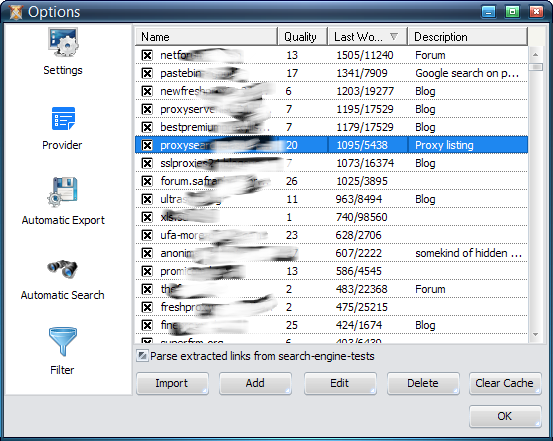

Provider

A website that offers free proxy servers is called a provider within GSA Proxy Scraper. The software comes with over 800 providers. A provider that is checked in the list will be parsed by the automatic searching mode. The maximum quality a provider can have is 100, which would mean that all extracted proxies work; that, however, is very unlikely. The Last Working column shows the number of working proxies that were extracted from that source the last time it was parsed. As a description you can enter whatever you want.

There is another option for finding proxies called “Parse extracted links from search-engine-tests”. Certain tests (Google Search, Yahoo, Bing,…) use a proxy as the search query and save every link extracted from the result page. With this option checked, the program parses all those links for new proxies. New proxy sources are often found this way, and you can later add them here as well. In the proxy list such sources are shown as “Proxy-Search Links - …”.

Another option called “Use Search Engines to locate proxy lists” will actively use search engines (Google, Bing…) with your queries (Other) or the found IPs themselves to find new proxies.

Under the list you have various options to manage the providers by adding new ones or deleting providers that no longer work.

- Import - Will open a popup menu where you can import various proxy sources from other programs

- Add - Will let you add proxy sources in different ways

- Edit - Will open the editor to fine tune the options for each provider

- Delete - Will delete all selected providers

- Clear Cache - This will delete all the cache files for the provider. Cache files record which proxies were extracted from the sources and found to be dead, so that further proxy crawling does not waste time on them. Clearing the cache is useful only in special cases, such as when all proxies were tagged as down because of network errors.

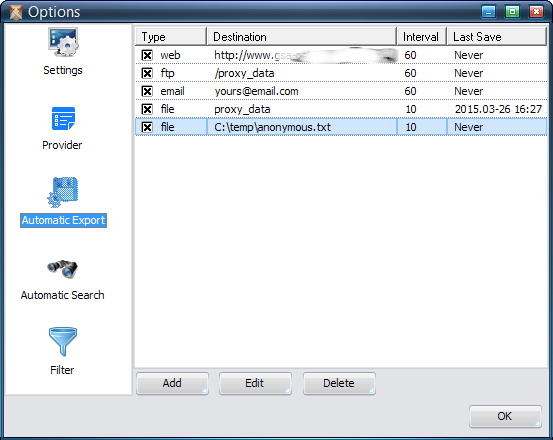

Automatic Export

There are many options for you to export proxies automatically. You can define an interval and different ways to export.

- File - Exports proxies to a file

- FTP - Uploads a file to an FTP server

- E-Mail - Sends an E-Mail with an attached file with proxies in it

- WEB Upload - This sends proxies to a web server. You can define exactly how this should happen (POST/GET). Also see this sample script in PHP to accept uploads.

You can of course Edit or Delete your previously set up export options here. An export that is checked in the list is used for automatic export; an unchecked one can still be used from the Export Toolbar.

Each export offers many different filters. If you plan to create an export for GScraper (a famous tool for search engine parsing), you must make sure that only proxies with an IP are exported (filter option Exclude proxies with a domain), as GScraper will not import anything if there is a domain in the list.
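What such a filter does can be sketched in Python. This is an illustration only, assuming the exported list uses a plain `host:port` format with IPv4 addresses; `has_ip_host` and `exclude_domains` are hypothetical names, not part of the software.

```python
import ipaddress


def has_ip_host(proxy):
    """True if the host part of 'host:port' is a literal IPv4 address."""
    host, sep, port = proxy.rpartition(":")
    if not sep or not port.isdigit():
        return False
    try:
        ipaddress.IPv4Address(host)
        return True
    except ValueError:
        # Raised for anything that is not a valid IPv4 literal, e.g. a domain.
        return False


def exclude_domains(proxies):
    """Keep only the proxies whose host is an IP address."""
    return [p for p in proxies if has_ip_host(p)]


proxies = ["192.0.2.10:8080", "proxy.example.com:3128", "198.51.100.4:80"]
print(exclude_domains(proxies))  # ['192.0.2.10:8080', '198.51.100.4:80']
```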

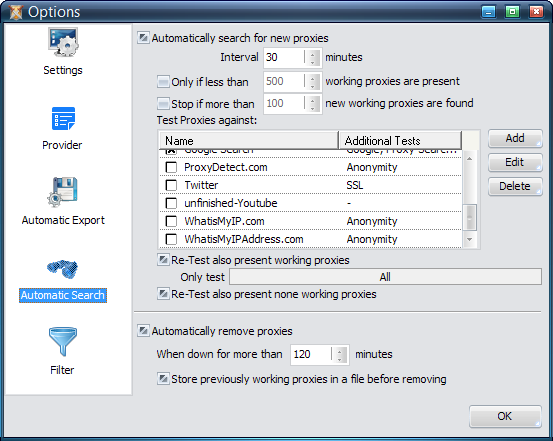

Automatic Search

Having this checked means the program will search all the providers for new proxies. You can define the Interval here as well as the conditions on when this should happen or stop.

The box with the different Tests is in fact very important. Usually people need proxies to be anonymous, so you should pick some tests here that check for this. The Google Search test might also be of interest to you. Other tests are available as well, like StopForumSpam or tests that check whether a proxy works with facebook.com.

Of course you can add your own tests here by clicking the Add button, or Delete the tests that are not of interest to you.

Each found proxy is tested against the selected tests. The option Re-Test also present working proxies should be checked to constantly test the proxies even if they have been found to be OK before. Public proxies are unfortunately very unstable and often go on/off. So it is important to always Re-Test them.

In case such a proxy is down, it might come back later on. For that situation you can turn on the option Re-Test also present, none working proxies.

However, it makes no sense to keep dead proxies forever in the hope that they will work again. You should leave the option Automatically remove proxies enabled so that you do not waste memory and other resources.

The option to store removed proxies in a file enables you to test them all again at a later time. That is possible by clicking Add → Previously Removed on the main interface.

Filter

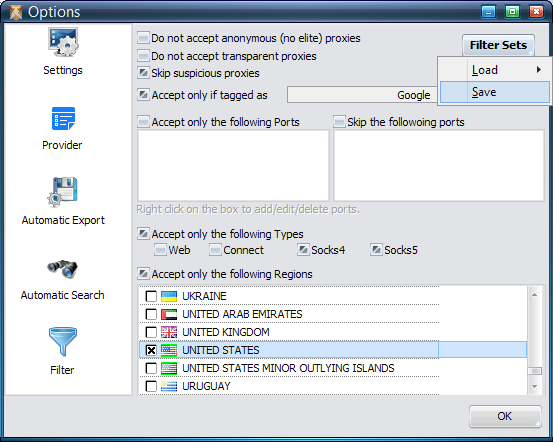

I don't recommend using any of the filter options, as you can always define filters for the automatic exports. However, for those who want them, the following options are available:

- Do not accept anonymous (no elite) proxies: Discards proxies that identify themselves as proxies but do not tell the remote website what IP you have.

- Do not accept transparent proxies: Discards proxies that identify themselves as proxies AND tell the remote website what IP you have.

- Skip suspicious proxies: Suspicious proxies are those that probably spy on your activities on the proxy server itself. Keep in mind that many of the proxy servers you find are run by spy agencies. Even if a proxy is not tagged as suspicious, it can still spy on you. You cannot trust anyone at all these days.

- Accept only if tagged as: Keep proxies only if they match a certain test and that test tagged the proxy as such.

- Accept only the following ports OR Skip the following ports: This can be useful if your firewall does not allow you to go online through certain ports or if you simply need proxies on a specific port.

- Skip duplicate IPs: Often you have proxies on the same IP/host but with different ports. This can be a problem if you have too many of them and send requests to search engines, which only see the IP and check against it to decide whether you are hammering them.

- Accept only the following Types: There are different types of proxies. WEB proxies work like the normal HTTP protocol but with a small modification to the request header. They are only useful for web queries. Then there are Connect, socks4 and socks5 proxies, which can be used for basically any purpose, not just website parsing.

- Accept only the following Regions: Proxies and their location on Earth are determined by a small database. In some cases it is required to take proxies only from a specific location, which can be set up here.
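To make the port and duplicate-IP filters above more concrete, here is a rough Python sketch of the same logic. It is not the program's implementation; it assumes proxies are given as `host:port` strings, and `filter_proxies` with its parameters is a hypothetical helper.

```python
def filter_proxies(proxies, accept_ports=None, skip_ports=None, skip_duplicate_ips=False):
    """Apply port and duplicate-host filters to a list of 'host:port' strings."""
    kept = []
    seen_hosts = set()
    for proxy in proxies:
        host, _, port_text = proxy.rpartition(":")
        port = int(port_text)
        # "Accept only the following ports"
        if accept_ports is not None and port not in accept_ports:
            continue
        # "Skip the following ports"
        if skip_ports is not None and port in skip_ports:
            continue
        # "Skip duplicate IPs": keep only the first proxy seen for each host.
        if skip_duplicate_ips:
            if host in seen_hosts:
                continue
            seen_hosts.add(host)
        kept.append(proxy)
    return kept


proxies = ["192.0.2.10:8080", "192.0.2.10:3128", "198.51.100.4:80"]
print(filter_proxies(proxies, skip_duplicate_ips=True))
# ['192.0.2.10:8080', '198.51.100.4:80']
```

Keeping the first proxy per host is an arbitrary choice made for this sketch; the software may decide differently which duplicate to keep.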